by Adam Kell

Transportation is about to hit exponential changes unlike anything we’ve ever seen before.

Today’s car is essentially a computer on wheels. Under the hood, you’ll find a complex computer network communicating with several sensors. These monitor things like tire pressure, acceleration, and engine oil quality, while also enabling control of speed, temperature, power doors, and power windows.

Emissions sensors in automobiles got their start as a direct consequence of government regulation. After the EPA set forth more stringent policies for exhaust emissions, it became standard for cars in the United States to be equipped with catalytic converters. By the 1980s, oxygen sensors were pivotal to making modern-day emissions control possible: by measuring the oxygen left in the exhaust, they let the engine diagnose and correct its air-to-fuel ratio.

Soon after, similar components followed, such as the oil level sensor, tire pressure sensor, fasten seatbelt light, and check engine light, all designed to notify the driver when an issue was present. These sensors and indicators have been instrumental in making automobiles more reliable, mainstream, and affordable by helping consumers avoid many destructive, costly, or dangerous maintenance issues.

The advances in the transportation industry over the next 10 years will vastly eclipse the changes over the past half century.

These changes will not only improve the overall driving experience. The next 10 years will involve an ecosystem overhaul, such that getting from point A to point B will be unrecognizable compared with driving today. The intelligent autos and infrastructure of the 21st century will actively suggest actions, or in many cases take control of the car, to protect against potential accidents and distracted driving, while providing real-time route planning and active traffic management.

So what’s changed?

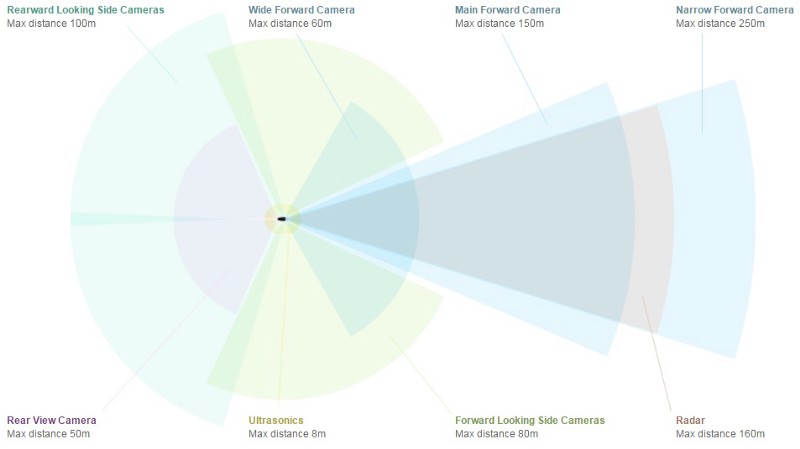

Onboard automotive sensors are pushing boundaries of perception, and doing so cheaper than ever — cars can sense more.

In previous years, the automotive industry hacked together hand-me-down sensors from other industries. But the scale and promise of autonomous cars have made even the most conservative automotive suppliers invest heavily in a dedicated autonomous vehicle sensor supply chain. Precision location sensors and services are becoming increasingly prevalent and pushing the boundaries of accuracy. Lidar, cameras, depth sensors, and radar are also fundamentally changing the perception benchmarks of vehicles. Together, these sensors will be the key to unlocking new levels of autonomy in the coming years.

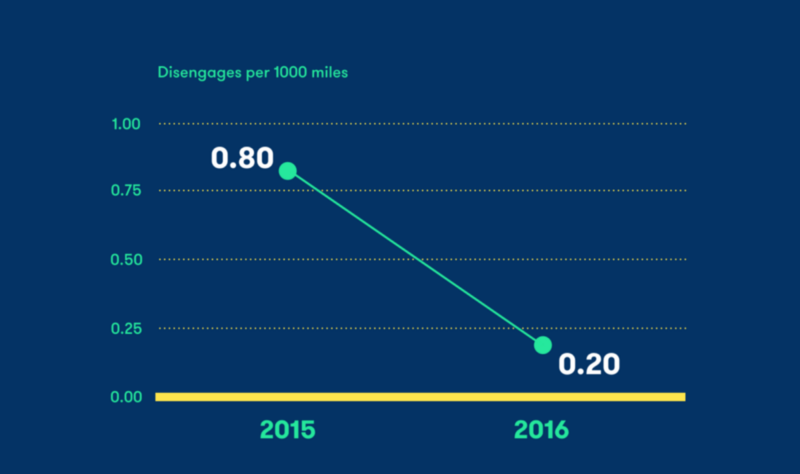

Autonomy systems and the related infrastructure are getting a lot more sophisticated — cars can know more.

Waymo’s autonomous miles driven now number in the millions, and at a high level of autonomy. Tesla’s Autopilot odometer (depending on who you ask) reads in the hundreds of millions or billions of miles, albeit at a lower level of autonomy. These companies are leading the charge to the autonomous future, but there is a large supporting cast that will make autonomy possible.

Companies focused on generating and compressing high-definition maps are making it less computationally intensive to solve perception problems onboard the vehicle. Tools and frameworks are being developed to make it easier to tag and annotate images.

Computer simulations are getting closer to being able to train the underlying neural networks without relying entirely on real cars in the physical world to uncover edge cases.

Kits are being developed that add features like adaptive cruise control and lane keeping to stock vehicles, while also building a corpus of training data to teach AI how humans actually drive.

Dedicated hardware, designed to run specific algorithms, is making tasks like vision far more efficient.

Infrastructure upgrades for new requirements in connectivity and communication — cars can talk to each other and the environment.

As autonomous vehicles move toward becoming a viable option for mainstream adoption, major infrastructure enhancements need to be considered and implemented. Infrastructure upgrades range in scope, from painting new, clearer lines that mark lane separation all the way to integrating new sensors and communication modules. Autonomous cars need to be able to perceive enough information about their environment to assess it, make a plan, and then react. The way infrastructure currently presents information to human drivers isn’t necessarily the best way to present that information directly to vehicles. For humans, we use paint in different colors, signs and signals, cones, and flares. For autonomous vehicles, the inputs will come from an environment that knows far more about conditions on the road: sensors that monitor traffic, systems that optimize traffic flow, and even cars that communicate with each other. How much of the autonomous future will have infrastructure 2.0, and how much of our autonomous systems will adapt to something closer to current infrastructure?

Intelligent manufacturing is making it possible to integrate new technology, build in new ways, and with new materials.

The way cars themselves are made is also being transformed by automation. New materials can be selected for optimal characteristics based on physical, chemical, and thermal constraints. These materials can then be combined in cleverer ways, with AI designing structural elements that are far from intuitive. New developments are making it cheaper and faster to build prototype parts from production materials. New applications of computer vision and machine learning techniques, like reinforcement learning, are pushing the boundaries of the types of parts whose production can be automated in the factory. The confluence of these factors is changing the way that automobiles are built and tested.

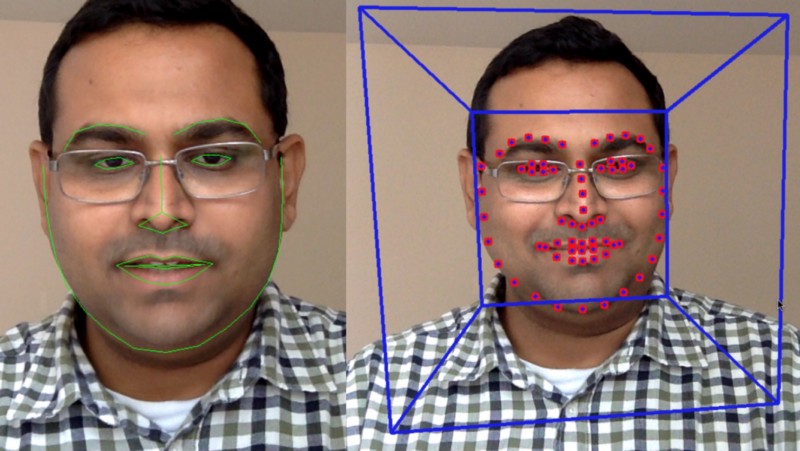

Driver-focused sensors are immensely important in the transition from level 0 to level 5 autonomous systems.

As an increasing number of conditions are created in which the driver may not be the main operator of the vehicle, systems are necessary to make sure the driver is paying attention when they need to be. Distraction, emotional state, sobriety, wakefulness, and health are all becoming possible to monitor using only a camera and software. Other sensors measuring the driver’s biometrics can also provide insights about what the car should do. Integrating other health data (risk for heart attack or stroke, for example) may change the way the car behaves in certain situations. All of these capabilities focus on keeping people safer and making cars more contextually aware.

New services in fleet management, ridesharing, and repair will become possible with so many connected and intelligent automobiles.

Cars that are both largely autonomous and sensor-laden will enable many new business models. Fleet management will become much more efficient since the cars will be able to communicate real-time status and be rerouted as situations change.
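To make the fleet management idea concrete, here is a minimal sketch of dispatching based on real-time status. Everything in it is an assumption made for illustration: the `VehicleStatus` fields, the `pick_vehicle` helper, the flat x/y coordinates, and the straight-line distance rule are hypothetical and do not describe any real fleet system.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class VehicleStatus:
    """Hypothetical real-time status report from one connected vehicle."""
    vehicle_id: str
    x_km: float       # position on a flat grid, in kilometers (assumption)
    y_km: float
    available: bool   # True if the vehicle can accept a new job


def pick_vehicle(statuses, x_km, y_km):
    """Return the nearest available vehicle to (x_km, y_km), or None."""
    candidates = [s for s in statuses if s.available]
    if not candidates:
        return None
    return min(candidates, key=lambda s: hypot(s.x_km - x_km, s.y_km - y_km))


fleet = [
    VehicleStatus("car-a", 0.0, 0.0, True),
    VehicleStatus("car-b", 2.0, 1.0, True),
    VehicleStatus("car-c", 2.5, 1.5, False),
]

# car-c is closest to the pickup point but busy, so car-b is chosen.
first = pick_vehicle(fleet, 3.0, 2.0)

# Once car-c reports that it is free, the same request is routed to it instead.
fleet[2].available = True
second = pick_vehicle(fleet, 3.0, 2.0)
```

A real dispatcher would use road-network travel times rather than straight-line distance, but the point stands: the decision changes as soon as a vehicle's reported status changes.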

Ridesharing will continue to make strides in the efficiency of route planning started by companies like Uber. The car repair industry will be similarly transformed because we will know so much more about what is happening inside a car.

Car ownership itself may change. On average, car owners currently leave their car parked 95% of the time. A ridesharing company dispatching autonomous vehicles could displace the need or desire to own a car. Ubiquitous real-time ridesharing (even pre-automation) is already driving large numbers of people away from car ownership in urban areas.
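The 95% figure is easier to appreciate as hours per day. The sketch below works through that arithmetic. The 50% utilization for a shared autonomous vehicle is purely an assumed number chosen for illustration, not a figure from any operator, and the function names are hypothetical.

```python
HOURS_PER_DAY = 24


def hours_in_use_per_day(parked_fraction):
    """Hours per day a car is actually in use, given the fraction of time parked."""
    return HOURS_PER_DAY * (1 - parked_fraction)


def private_cars_covered(parked_fraction, shared_utilization):
    """How many private cars' worth of driving time one shared car could cover.

    shared_utilization is the fraction of the day the shared car is in use
    (an assumed input, not a measured value).
    """
    return shared_utilization / (1 - parked_fraction)


in_use = hours_in_use_per_day(0.95)          # roughly 1.2 hours per day
covered = private_cars_covered(0.95, 0.50)   # roughly 10 cars

print(f"In use about {in_use:.1f} hours per day")
print(f"One shared car covers about {covered:.0f} private cars' driving time")
```

This ignores peak demand, since many of those private cars are driven at the same rush hours, so the real replacement ratio would be lower than this simple division suggests.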

All these factors are leading to a transportation revolution. However, new entrants to the transportation industry face a much more complicated regulatory system, customer development process, and supply chain. Comet Labs’ Transportation Lab helps startups developing the core technologies that will transform the transportation industry, accelerating their customer development by providing resources that money can’t buy (such as HD mapping data, autonomous test vehicles, and space to pilot).

Are you building transportation technology? We’d love to hear from you.